In the high-octane world of data management, where microseconds can separate success from failure, a quiet threat often lurks beneath the surface, capable of stalling even robust systems. We’re talking about SQL Server deadlocks, a scenario that sounds technical and obscure but has very visible consequences. Imagine two cars on a one-lane bridge, both determined to cross, neither willing to back down. The result? Gridlock, frustration, and a complete standstill until one of them reverses. This digital traffic jam, if left unaddressed, can disrupt business operations, erode customer trust, and cost real money. Yet for forward-thinking organizations, understanding and mastering deadlocks is also an opportunity for system optimization and greater resilience.

The immediate impact of a deadlock is undeniably disruptive. Users experience stalled applications, delayed or failed transactions, and often inexplicable error messages. Behind the scenes, the transactions involved sit holding their locks until SQL Server breaks the cycle by rolling one of them back, and throughput suffers while that happens. This isn’t merely an inconvenience; it’s a direct hit to productivity and reliability. Companies relying on real-time data for financial transactions, e-commerce, or critical operational decisions cannot afford frequent disruptions of this kind. However, by taking a proactive and analytical approach, businesses can turn these incidents into catalysts for building robust and efficient SQL Server environments that keep operating smoothly under heavy load.

The table below summarizes the nature of SQL Server deadlocks, highlighting their characteristics and common instigators. Understanding these foundational elements is the first step toward effective prevention and management.

| Aspect | Description | Common Causes |
|---|---|---|
| Definition | A situation where two or more transactions block each other, each waiting for the other to release a resource, so that none can proceed on its own. | Concurrent access to shared resources, inefficient transaction design, lack of proper indexing. |
| Symptoms | Application hangs, transaction timeouts, error messages (e.g., error 1205: “Transaction (Process ID) was deadlocked on lock resources with another process and has been chosen as the deadlock victim. Rerun the transaction.”). | High concurrency, long-running transactions, complex queries, nested transactions. |
| Impact | Reduced system performance, failed requests, user frustration, rolled-back work for the victim transaction, business disruption. | Unnecessary table scans, missing or inappropriate indexes, poorly written stored procedures, lack of transaction isolation level understanding. |
| Prevention Focus | Minimizing shared resource contention, optimizing transaction scope and duration, improving query performance. | Implicit transactions, large batch updates without proper locking hints, application-level locking mechanisms interfering with database locks. |
| Detection & Resolution | SQL Server automatically detects deadlocks and chooses a “victim” to terminate, rolling back its transaction. | System-level configuration issues (e.g., max degree of parallelism), outdated statistics, hardware bottlenecks exacerbating contention. |
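Because the victim's transaction is rolled back and the error message itself says to rerun it, applications usually wrap such work in retry logic. The following is a minimal Python sketch of that idea, not a definitive implementation. `DeadlockVictimError` and `run_with_deadlock_retry` are hypothetical names introduced here for illustration; a real database driver raises its own exception type, and the check for error number 1205 would have to be adapted to it.

```python
import random
import time

# SQL Server reports a deadlock victim with error number 1205.
DEADLOCK_VICTIM_ERROR = 1205


class DeadlockVictimError(Exception):
    """Hypothetical stand-in for a driver exception that carries error 1205."""

    def __init__(self, message="Transaction was chosen as the deadlock victim."):
        super().__init__(message)
        self.number = DEADLOCK_VICTIM_ERROR


def run_with_deadlock_retry(work, max_attempts=3, base_delay=0.05, sleep=time.sleep):
    """Call work() and retry it only when it fails as a deadlock victim.

    work must run the whole transaction from the start, because the
    victim's earlier changes have already been rolled back.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return work()
        except DeadlockVictimError:
            if attempt == max_attempts:
                raise  # give up and let the caller see the failure
            # Exponential backoff with jitter, so the retry is less likely
            # to collide with the same competing transaction again.
            delay = base_delay * (2 ** (attempt - 1)) * (1 + random.random())
            sleep(delay)
```

Retrying hides the symptom from users, but it does not remove the contention; frequent retries are still a signal that the underlying transactions deserve a closer look.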

The core of a SQL Server deadlock lies in resource contention, an unavoidable reality in any multi-user database system. When multiple transactions simultaneously attempt to access and modify the same data, locks are inevitably acquired. A deadlock materializes when Transaction A holds a lock on Resource X and requests a lock on Resource Y, while Transaction B holds a lock on Resource Y and requests a lock on Resource X. Neither can proceed, resulting in a classic stalemate. SQL Server runs a deadlock monitor that detects these stalemates and, to break the cycle, designates one transaction as the “victim,” terminating it and rolling back its changes. While this prevents indefinite paralysis, it’s a reactive solution that costs performance and requires the victimized transaction to be retried, impacting user experience.
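The stalemate between Transaction A and Transaction B can be pictured as a cycle in a "wait-for" graph. The Python sketch below is a toy model of that idea and not SQL Server's actual implementation: all function names are hypothetical, each transaction is assumed to wait on at most one resource, and the victim is simply the transaction with the lowest assumed rollback cost (the real choice also takes session deadlock priority into account).

```python
def build_wait_for_graph(holds, requests):
    """Map each blocked transaction to the transaction it is waiting on.

    holds:    resource -> transaction currently holding the lock
    requests: transaction -> resource it is waiting to lock
    """
    return {
        txn: holds[res]
        for txn, res in requests.items()
        if res in holds and holds[res] != txn
    }


def find_deadlock_cycle(waits_for):
    """Return the transactions that form a cycle, or None if there is none."""
    for start in waits_for:
        path, seen = [], set()
        current = start
        # Follow the chain of "waits for" edges until it ends or repeats.
        while current in waits_for and current not in seen:
            seen.add(current)
            path.append(current)
            current = waits_for[current]
        if current in seen:
            return path[path.index(current):]
    return None


def choose_victim(cycle, rollback_cost):
    """Pick the transaction that is cheapest to roll back (simplified rule)."""
    return min(cycle, key=lambda txn: rollback_cost[txn])


# The scenario from the text: A holds X and wants Y, B holds Y and wants X.
holds = {"X": "A", "Y": "B"}
requests = {"A": "Y", "B": "X"}
cycle = find_deadlock_cycle(build_wait_for_graph(holds, requests))
victim = choose_victim(cycle, rollback_cost={"A": 500, "B": 20})
```

With these illustrative costs, the cycle contains both transactions and B is chosen as the victim because it has less work to undo.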

Industry experts broadly agree that prevention is far superior to resolution. Dr. Anya Sharma, a renowned database architect and author, often emphasizes, “Optimizing transaction design is paramount. Keep transactions as short and concise as possible, minimizing the time resources are held locked.” This strategy, coupled with meticulous indexing, dramatically reduces the likelihood of resource conflicts. Properly designed indexes allow queries to retrieve data far more efficiently, often avoiding the need for extensive table or page locks. Furthermore, understanding and judiciously applying appropriate transaction isolation levels (from READ UNCOMMITTED to SERIALIZABLE) can significantly influence locking behavior, enabling developers to strike a delicate balance between concurrency and data consistency.

Beyond transactional best practices, modern tools and methodologies are transforming how organizations tackle deadlocks. Proactive monitoring solutions, leveraging AI-driven insights, can identify patterns of contention before they escalate into full-blown deadlocks. By integrating real-time telemetry from SQL Server, these systems predict potential bottlenecks, allowing database administrators to intervene strategically. For instance, a major financial institution recently implemented a predictive analytics platform that continuously analyzes query performance and lock wait times. This allowed them to proactively refactor problematic stored procedures and re-index critical tables, resulting in a staggering 70% reduction in daily deadlock occurrences and a tangible boost in transaction throughput.

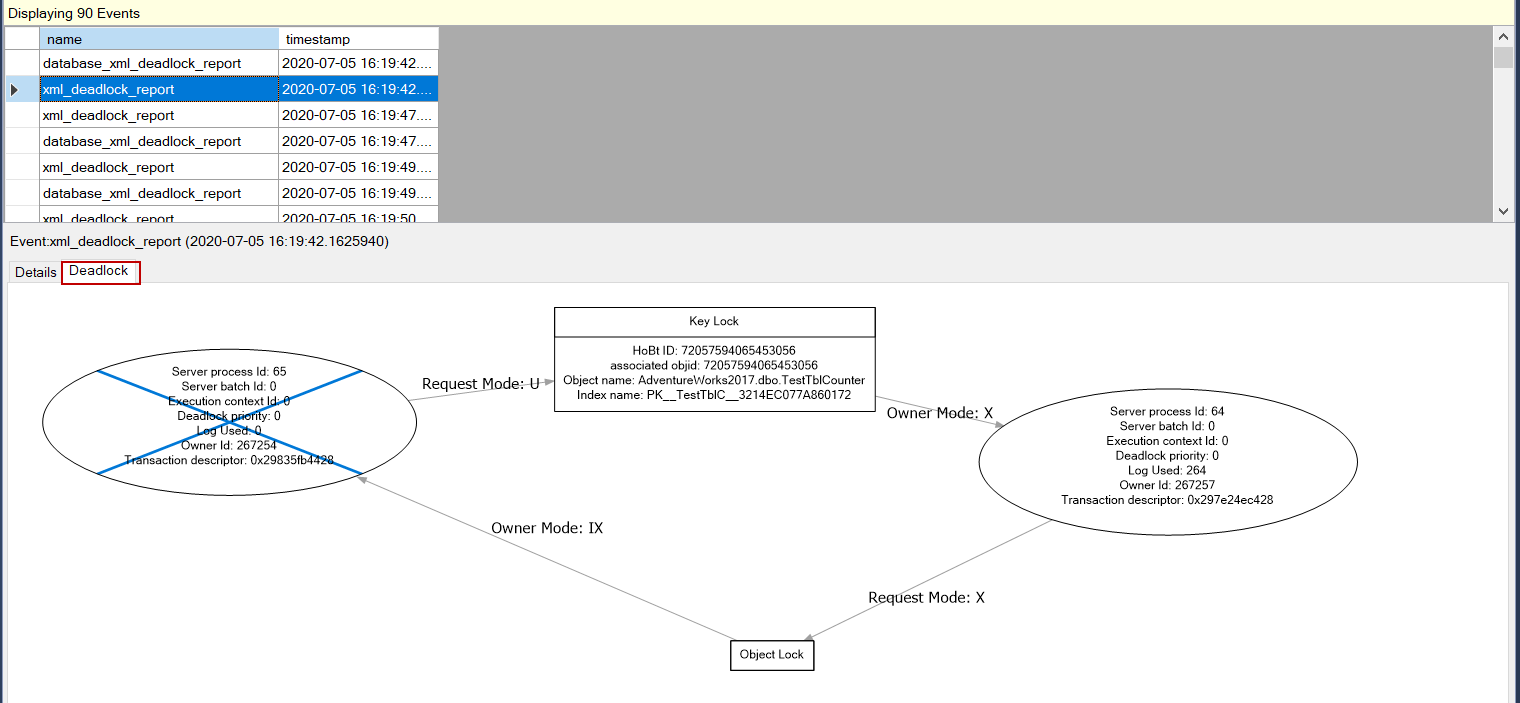

The journey towards a deadlock-resistant SQL Server environment is an ongoing commitment to excellence. It involves a holistic approach encompassing rigorous query optimization, thoughtful schema design, strategic indexing, and continuous performance monitoring. Adopting advanced diagnostic tools, such as Extended Events and deadlock graph analysis, empowers teams to pinpoint the exact resources involved and the specific queries causing contention. This deep-dive analysis provides actionable intelligence, transforming abstract problems into concrete solutions. By understanding the ‘how’ and ‘why’ behind each deadlock, organizations are not just patching symptoms; they are fundamentally strengthening their database infrastructure.
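A deadlock graph is delivered as XML, so the "exact resources" and "specific queries" can be extracted with a few lines of code. The Python sketch below parses a hand-written, heavily simplified fragment modeled on the general shape of a deadlock report (victim list, process list, resource list). The sample data, object names, and the `summarize_deadlock` helper are assumptions made for illustration; real reports contain many more attributes and may differ in detail between versions.

```python
import xml.etree.ElementTree as ET

# Hand-written, simplified sample; not captured from a real server.
SAMPLE_DEADLOCK_XML = """
<deadlock>
  <victim-list>
    <victimProcess id="process2" />
  </victim-list>
  <process-list>
    <process id="process1" spid="55">
      <inputbuf>UPDATE dbo.Orders SET Status = 2 WHERE OrderId = 10</inputbuf>
    </process>
    <process id="process2" spid="61">
      <inputbuf>UPDATE dbo.Customers SET Tier = 1 WHERE CustomerId = 7</inputbuf>
    </process>
  </process-list>
  <resource-list>
    <keylock objectname="Sales.dbo.Customers" mode="X">
      <owner-list><owner id="process1" mode="X" /></owner-list>
      <waiter-list><waiter id="process2" mode="U" /></waiter-list>
    </keylock>
    <keylock objectname="Sales.dbo.Orders" mode="X">
      <owner-list><owner id="process2" mode="X" /></owner-list>
      <waiter-list><waiter id="process1" mode="U" /></waiter-list>
    </keylock>
  </resource-list>
</deadlock>
"""


def summarize_deadlock(xml_text):
    """Return (processes, resources) extracted from a deadlock graph."""
    root = ET.fromstring(xml_text)
    victims = {v.get("id") for v in root.findall("victim-list/victimProcess")}

    processes = {}
    for proc in root.findall("process-list/process"):
        processes[proc.get("id")] = {
            "spid": proc.get("spid"),
            "statement": (proc.findtext("inputbuf") or "").strip(),
            "is_victim": proc.get("id") in victims,
        }

    resources = []
    resource_list = root.find("resource-list")
    for res in (resource_list if resource_list is not None else []):
        resources.append({
            "type": res.tag,  # e.g. keylock, pagelock, objectlock
            "object": res.get("objectname"),
            "owners": [o.get("id") for o in res.findall("owner-list/owner")],
            "waiters": [w.get("id") for w in res.findall("waiter-list/waiter")],
        })
    return processes, resources


processes, resources = summarize_deadlock(SAMPLE_DEADLOCK_XML)
```

Reading the owners and waiters side by side shows the cycle directly: each process owns the lock the other one is waiting for, which points to the two statements that need to be reordered, shortened, or better indexed.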

Ultimately, the question “How bad can SQL Server deadlocks be?” has a two-part answer: potentially crippling, but also incredibly illuminating. They serve as critical diagnostic signals, revealing underlying inefficiencies and areas for improvement within your database architecture. Rather than viewing them as inevitable nuisances, forward-thinking enterprises are increasingly perceiving deadlocks as valuable feedback mechanisms. By embracing a culture of continuous optimization, leveraging cutting-edge tools, and applying expert-driven strategies, businesses can not only mitigate the detrimental effects of deadlocks but also elevate their SQL Server performance, ensuring seamless, resilient, and high-performing data operations for years to come. The future of data management is not just about avoiding problems; it’s about transforming challenges into pathways for innovation and superior system health.